NSLLM bridges LLMs and neuroscience

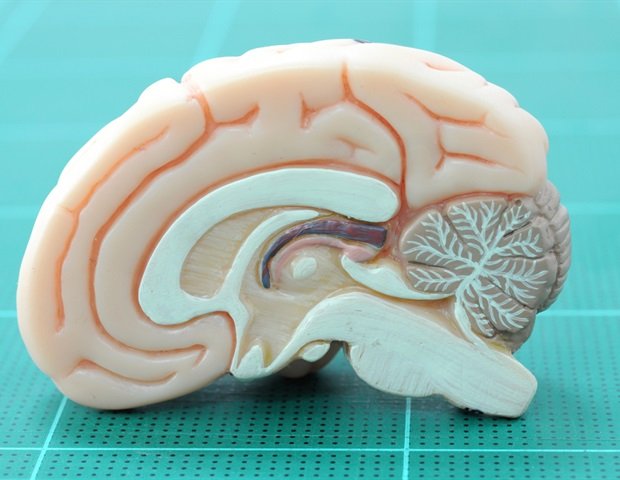

Large language models (LLMs) have become critical tools in the pursuit of artificial general intelligence (AGI). However, as the user base expands and usage grows, these models incur significant computational and memory costs, limiting their potential to serve as fundamental infrastructure for human society. Furthermore, current LLMs generally lack interpretability: their opaque decision-making and optimization processes make it difficult to ensure reliability and fairness in high-risk areas such as healthcare and finance. In contrast, the human brain performs complex tasks on less than 20 watts of power while exhibiting remarkable transparency in its cognitive processes. This stark contrast highlights the gap between LLMs and human cognition and presents a dual challenge. On the one hand, the computational efficiency of LLMs must improve to save energy and conserve resources. On the other hand, enhancing their interpretability is crucial for a better understanding of how components interact and function in large-scale systems.

To overcome this cross-disciplinary bottleneck, the study proposes a unified framework that converts conventional LLMs into NSLLMs through integer spike counting and binary spike conversion, while incorporating a spike-based linear attention mechanism. The framework bridges neuroscience and large language models, offering a platform for applying neuroscience tools to LLMs. By training with integer values and running inference with binary spikes, it transforms the outputs of standard LLMs into spike representations that neuroscience tools can analyze.
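The idea of integer training with binary inference can be illustrated with a minimal Python sketch. It is not the study's implementation: the function names, the `scale` and `max_count` parameters, and the choice to place all spikes at the start of the time window are assumptions made only for illustration.

```python
import math
from typing import List


def quantize_to_spike_counts(activations: List[float], max_count: int,
                             scale: float) -> List[int]:
    """Map real-valued activations to integer spike counts in [0, max_count].

    `scale` and `max_count` are hypothetical quantization parameters.
    """
    counts = []
    for a in activations:
        c = int(math.floor(a / scale + 0.5))  # round half up
        counts.append(min(max(c, 0), max_count))  # clip to the valid range
    return counts


def counts_to_binary_spikes(counts: List[int], time_steps: int) -> List[List[int]]:
    """Expand each integer count into a 0/1 spike train of length `time_steps`.

    Spikes are placed at the start of the window (an arbitrary choice);
    only the number of spikes per train carries the value.
    """
    trains = []
    for c in counts:
        if c > time_steps:
            raise ValueError("count does not fit in the time window")
        trains.append([1] * c + [0] * (time_steps - c))
    return trains


if __name__ == "__main__":
    counts = quantize_to_spike_counts([-1.3, 0.0, 0.9, 2.2, 7.5],
                                      max_count=4, scale=1.0)
    for count, train in zip(counts, counts_to_binary_spikes(counts, time_steps=4)):
        print(count, train)
```

Under these assumptions, the integer count is the value the network would see during training, while the binary train is what a spike-based inference engine would process one time step at a time.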

Ultra-low-power, MatMul-free hardware-software co-design for LLMs

To validate the energy efficiency of the approach, the study implements a custom MatMul-free computing architecture for a billion-parameter-scale model on an FPGA platform. Specifically, a layer-wise quantization strategy and hierarchical sensitivity metrics are used to evaluate the impact of each layer on quantization loss, allowing the formulation of an optimal mixed-time-step spike model that achieves competitive performance under low-bit quantization. In addition, a quantization-assisted sparsification strategy is introduced to reshape the membrane potential distribution and shift the quantization mapping probability toward lower integer values, significantly reducing the spike firing rate and further improving model efficiency. On the VCK190 FPGA, a MatMul-free hardware core is designed that completely eliminates the matrix multiplication operations in NSLLM, reducing dynamic power consumption to 13.849 W and increasing throughput to 161.8 tokens/s. Compared to an A800 GPU, this approach achieves 19.8× higher energy efficiency, 21.3× memory savings, and 2.2× higher inference throughput.
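Why binary spikes remove the need for matrix multiplication can be shown with a small sketch. It illustrates the general principle only and is not the FPGA core described above: `spike_accumulate`, `dense_matvec`, and the toy weight matrix are hypothetical names and values chosen for the example.

```python
from typing import List


def spike_accumulate(spikes: List[int], weights: List[List[int]]) -> List[int]:
    """Compute spikes @ weights for a 0/1 input vector using additions only.

    weights[i][j] connects input i to output j. Silent inputs (0) are
    skipped entirely, so the work scales with the number of spikes.
    """
    n_out = len(weights[0])
    out = [0] * n_out
    for i, s in enumerate(spikes):
        if s:  # event-driven: only firing inputs contribute
            row = weights[i]
            for j in range(n_out):
                out[j] += row[j]
    return out


def dense_matvec(x: List[int], weights: List[List[int]]) -> List[int]:
    """Reference vector-matrix product with multiplications."""
    n_out = len(weights[0])
    return [sum(x[i] * weights[i][j] for i in range(len(x))) for j in range(n_out)]


if __name__ == "__main__":
    weights = [[1, -2], [3, 0], [-1, 4]]
    # Two time steps of binary spikes; per-input spike counts are [2, 1, 0].
    spikes_per_step = [[1, 1, 0], [1, 0, 0]]
    total = [0, 0]
    for step in spikes_per_step:
        partial = spike_accumulate(step, weights)
        total = [t + p for t, p in zip(total, partial)]
    counts = [sum(col) for col in zip(*spikes_per_step)]
    print(total, dense_matvec(counts, weights))
```

Summing the accumulate-only results over time steps gives the same output as multiplying the integer spike counts by the weights. A lower firing rate therefore means fewer additions, which is consistent with the emphasis on reducing the spike firing rate.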

Improved interpretability through spiking neural populations

By converting the behavior of LLMs into neuronal dynamical representations, such as spike trains, the NSLLM framework makes it possible to analyze both the dynamical properties of their neurons (e.g., randomness quantified by Kolmogorov-Sinai entropy) and their information processing characteristics (e.g., Shannon entropy and mutual information). This allows a clearer interpretation of the computational roles played by NSLLMs. Experimental results show that the model encodes information more efficiently when processing unambiguous text, allowing it to distinguish between ambiguous and unambiguous inputs (for example, middle layers show higher normalized mutual information for ambiguous sentences, [...] stronger information transmission ability). Furthermore, the positive correlation between mutual information and Shannon entropy suggests that layers with higher information capacity are better at preserving key input features. By combining neural dynamics with information-theoretic measures, this framework provides biologically inspired interpretability for LLM mechanisms while significantly reducing data requirements.
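The information-theoretic quantities mentioned above can be computed directly from discrete spike data. The sketch below uses plug-in (empirical frequency) estimates and normalizes mutual information by the geometric mean of the two entropies. Both choices are assumptions, since the text does not state which estimator or normalization the study uses, and Kolmogorov-Sinai entropy is not covered here.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def shannon_entropy(symbols: Sequence[Hashable]) -> float:
    """Plug-in Shannon entropy (bits) of a sequence of discrete symbols."""
    n = len(symbols)
    freq = Counter(symbols)
    return -sum((c / n) * math.log2(c / n) for c in freq.values())


def mutual_information(xs: Sequence[Hashable], ys: Sequence[Hashable]) -> float:
    """I(X; Y) = H(X) + H(Y) - H(X, Y), estimated from paired samples."""
    if len(xs) != len(ys):
        raise ValueError("sequences must have the same length")
    joint = list(zip(xs, ys))
    return shannon_entropy(xs) + shannon_entropy(ys) - shannon_entropy(joint)


def normalized_mutual_information(xs: Sequence[Hashable],
                                  ys: Sequence[Hashable]) -> float:
    """Mutual information divided by sqrt(H(X) * H(Y)).

    This normalization is one common convention, assumed for illustration.
    """
    denom = math.sqrt(shannon_entropy(xs) * shannon_entropy(ys))
    return mutual_information(xs, ys) / denom if denom > 0 else 0.0


if __name__ == "__main__":
    layer_in = [0, 1, 0, 1, 1, 0, 1, 0]    # hypothetical binary spike train
    layer_out = [0, 1, 0, 1, 1, 0, 0, 1]   # hypothetical downstream train
    print(shannon_entropy(layer_in))
    print(normalized_mutual_information(layer_in, layer_out))
```

In this toy setting, a downstream train that copies its input has normalized mutual information of 1, while a statistically independent train scores 0. Plug-in estimates are biased for short sequences, so real analyses typically need longer recordings or a bias correction.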

Neuroscience research has shown that the human brain achieves energy-efficient information processing through event-based sparse computation, improving both communication efficiency and system interpretability. Building on this principle, the team developed an interdisciplinary unified framework that introduces a neuromorphic alternative to traditional LLMs, with performance on par with similarly sized mainstream models on common-sense reasoning and a range of more complex large-model tasks such as reading comprehension, world-knowledge question answering, and mathematics. This framework not only advances the frontier of energy-efficient artificial intelligence, but also offers new perspectives on the interpretability of large language models and provides valuable insights for the design of future neuromorphic chips.